Overview

K8s, Containers + Orchestration

Containers Overview

- Containers help avoid:

- Compatibility/Dependency problems

- Long setup time

- Different Dev/Test/Prod environments

- Containers share the underlying kernel of the host OS

- Purpose is to containerize applications and run them

- Containers vs VMs

- VMs run a separate OS for each application, while containers on the same host share that host's OS kernel

- Containers vs images

- An image is the template (plan) for a container; each container is a running instance of an image

Container Orchestration

- Multiple instances of an application on different nodes

- Load balancing features

- Scaling configurations

- Used to deploy applications on clustered architectures made of many nodes
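To make the load-balancing idea concrete, here is a minimal Python sketch of round-robin distribution across instances of one application running on different nodes. The instance and node names are hypothetical, and round-robin is only one possible strategy; real orchestrators and Kubernetes Services may distribute requests differently.

```python
from itertools import cycle

# Hypothetical instances of the same application, each on a different node
instances = ["app-1@node-a", "app-2@node-b", "app-3@node-c"]


def round_robin(backends):
    """Yield backends in turn, wrapping around after the last one."""
    return cycle(backends)


balancer = round_robin(instances)
# Send six requests: each instance receives two of them, in order
assignments = [next(balancer) for _ in range(6)]
print(assignments)
```

Adding a fourth instance (scaling out) simply spreads the same requests over more backends.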

Kubernetes Architecture

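- Nodes (formerly called Minions): the machines, physical or virtual, on which Kubernetes runs containers

- Cluster: a set of nodes grouped together, so the application stays available if one node fails

- Master node: watches over the other nodes in the cluster and is responsible for orchestrating the containers on them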

- Kubernetes Components

- API Server (frontend for Kubernetes)

- Scheduler (distributing work across multiple nodes)

- Controllers (brain behind orchestration; they notice when nodes or containers go down and decide to bring up new ones)

- Container Runtime (e.g. Docker, responsible for running applications in containers)

- Kubelet (agent running on each node, responsible for making sure the containers are running on the nodes as expected)

- etcd (distributed key-value store holding the data used to manage the cluster; ensures there are no conflicts)

- Master vs Worker nodes

- Master

- kube-apiserver

- etcd

- controller

- scheduler

- Worker

- kubelet agent

- Container runtime

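- kubectl (kube control): the command-line tool used to deploy and manage applications on a cluster

- kubectl run hello-minikube --image=<image>: deploy an application on the cluster (recent kubectl versions require the --image flag)

- kubectl cluster-info: view information about the cluster

- kubectl get nodes: list all the nodes in the cluster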
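The scheduler's job of "distributing work across multiple nodes" can be pictured with a deliberately simplified Python sketch: place each new POD on the node currently running the fewest PODs. The POD and node names and the "fewest PODs" rule are assumptions for illustration only; the real kube-scheduler filters nodes and then scores them on many criteria (resources, affinity, taints and so on).

```python
def schedule(pod_names, node_names):
    """Place each POD on the node that currently runs the fewest PODs.

    Toy rule for illustration, not kube-scheduler's actual algorithm.
    """
    load = {node: 0 for node in node_names}
    placement = {}
    for pod in pod_names:
        # min() returns the first node with the lowest load, so ties keep list order
        target = min(node_names, key=lambda node: load[node])
        placement[pod] = target
        load[target] += 1
    return placement


placement = schedule(["web-1", "web-2", "web-3", "web-4"], ["node01", "node02"])
print(placement)
# {'web-1': 'node01', 'web-2': 'node02', 'web-3': 'node01', 'web-4': 'node02'}
```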

Introduction and Minikube Reference

Please find some links to Kubernetes Documentation Site below:

Kubernetes Documentation Site: https://kubernetes.io/docs/

Kubernetes Documentation Concepts: https://kubernetes.io/docs/concepts/

Kubernetes Documentation Setup: https://kubernetes.io/docs/setup/

Kubernetes Documentation – Minikube Setup: https://kubernetes.io/docs/getting-started-guides/minikube/

Kubeadm Setup part 1 VMs and Pre-Requisites Reference

Oracle VirtualBox: https://www.virtualbox.org/

Link to download VM images: http://osboxes.org/

Link to kubeadm installation instructions: https://kubernetes.io/docs/setup/independent/install-kubeadm/

Important Notice!!

If you are following along with your own setup, make sure to use the command below to set up networking with Flannel. The command on the Kubernetes Documentation web site seems to have an issue.

kubectl create -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Kubeadm Setup part 2 Configure Cluster with kubeadm Reference

Kubernetes Concepts

PODs

- Kubernetes does not deploy containers directly on the worker nodes

- Containers are encapsulated in PODs. A POD is a single instance of an application and the smallest object you can create in Kubernetes

- A POD usually has a 1:1 relationship with the container running the application. To scale up, deploy additional PODs rather than adding containers to an existing POD; to scale down, delete PODs

- A POD can hold multiple containers, but usually not multiple containers of the same kind. Extra containers are typically helpers supporting the main container (e.g. a helper container processing data for the main application)

- Deploy a POD with kubectl run nginx --image=nginx

- the --image parameter specifies the container image to use

- kubectl get pods lists the PODs

- Each POD gets its own internal IP address

src: Kubernetes for the Absolute Beginners course

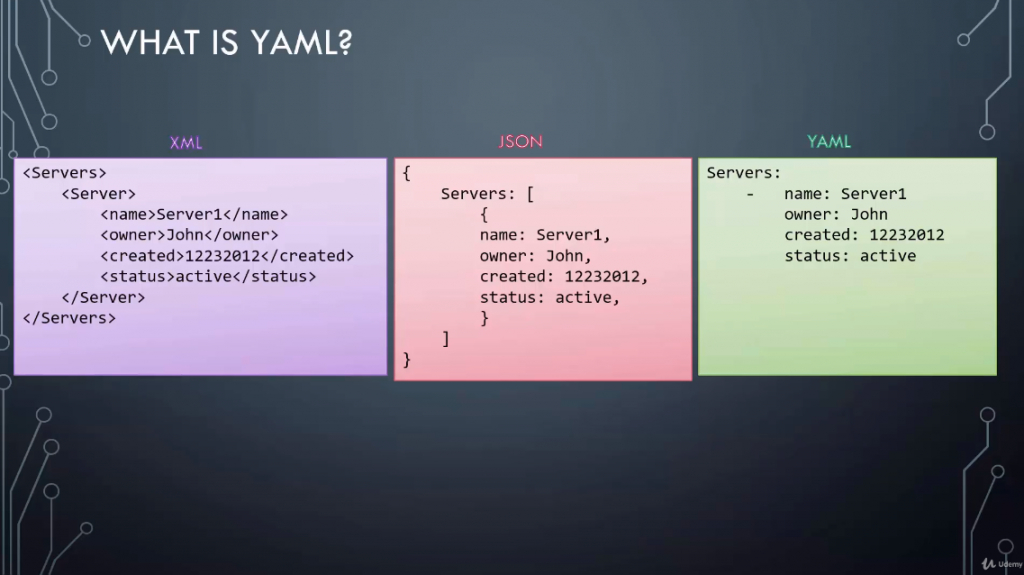

YAML

- Key-value pair data format (used for the same purposes as XML and JSON, but simpler to read)

- A dash (-) indicates an element of an array (list)

- Items indented by an equal number of spaces belong to the same dictionary/map

- Dictionary vs List vs List of Dictionaries

- A dictionary represents the properties of an object

- via key-value pairs

- there can be a dictionary within a dictionary

- e.g. the model of a car can be a dictionary with 2 properties, such as name and year

- A list holds multiple items of the same type of object

- A list of dictionaries holds all the information about multiple items/instances of the same type of object

- Dictionary = unordered collection

- List = ordered collection

- Properties can be defined in any order in a dictionary

- The same cannot be said about an array/list: the order of its items matters

For example, Payslips is a list of dictionaries within the Employee dictionary:

```yaml
Employee:
  Name: Jacob
  Sex: Male
  Age: 30
  Title: Systems Engineer
  Projects:
    - Automation
    - Support
  Payslips:
    - Month: June
      Wage: 4000
    - Month: July
      Wage: 4500
    - Month: August
      Wage: 4000
```
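The Employee document maps directly onto Python data structures: YAML dictionaries become dicts and YAML lists become lists. The sketch below builds that structure by hand (no YAML parser, so it stays standard-library only) and shows why order matters for lists but not for dictionaries. The car values are example data.

```python
employee = {
    "Employee": {
        "Name": "Jacob",
        "Sex": "Male",
        "Age": 30,
        "Title": "Systems Engineer",
        "Projects": ["Automation", "Support"],  # list of strings
        "Payslips": [                             # list of dictionaries
            {"Month": "June", "Wage": 4000},
            {"Month": "July", "Wage": 4500},
            {"Month": "August", "Wage": 4000},
        ],
    }
}

# Dictionaries compare by keys and values, so property order does not matter
same_car_a = {"name": "Corvette", "year": 1995}
same_car_b = {"year": 1995, "name": "Corvette"}
print(same_car_a == same_car_b)  # True

# Lists are ordered: the same items in another order form a different list
print(["Automation", "Support"] == ["Support", "Automation"])  # False

# Walk the list of dictionaries to total the wages
total_wages = sum(p["Wage"] for p in employee["Employee"]["Payslips"])
print(total_wages)  # 12500
```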

Kubernetes Concepts: PODs, ReplicaSets, Deployments

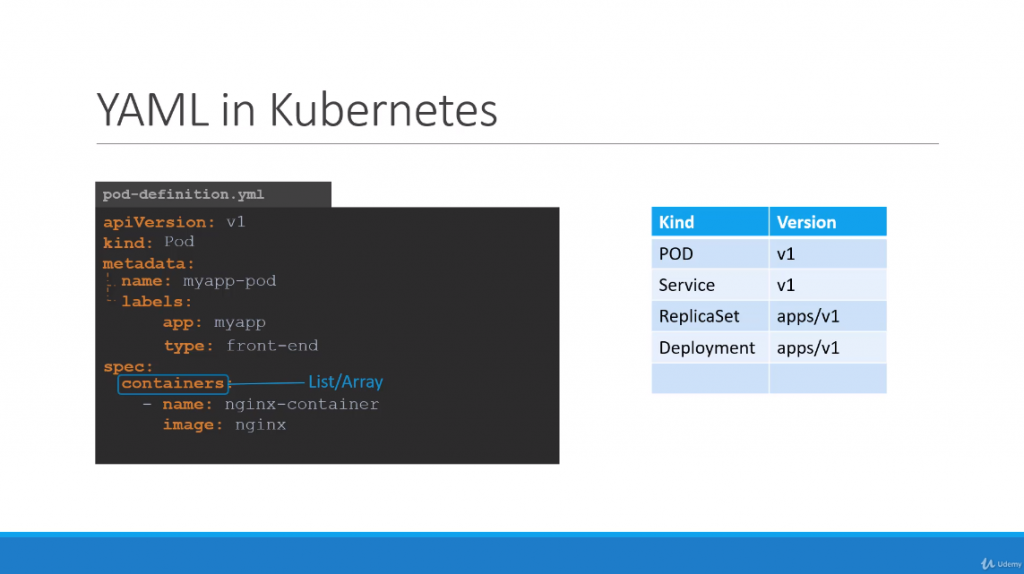

PODs with YAML

- Kubernetes uses YAML files as input for creating objects such as PODs (e.g. in POD definition files)

- Every definition file has 4 required root-level properties

- apiVersion, kind (e.g. Pod), metadata, spec

- kubectl create -f pod-definition.yml

- kubectl get pods

Sample of a pod-definition.yml:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
  labels:
    app: myapp
spec:
  containers:
    - name: nginx
      image: nginx
```
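The rule that a definition file needs apiVersion, kind, metadata and spec can be checked with a small Python sketch. The POD is written as a dict mirroring the YAML sample; the check only mirrors that one rule, while the API server performs far more validation.

```python
import json

# The four root-level properties every definition file needs
REQUIRED_ROOT_KEYS = ("apiVersion", "kind", "metadata", "spec")

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "myapp-pod", "labels": {"app": "myapp"}},
    "spec": {"containers": [{"name": "nginx", "image": "nginx"}]},
}


def missing_root_keys(definition):
    """Return the required root-level properties that a definition lacks."""
    return [key for key in REQUIRED_ROOT_KEYS if key not in definition]


print(missing_root_keys(pod))              # []
print(missing_root_keys({"kind": "Pod"}))  # ['apiVersion', 'metadata', 'spec']

# kubectl create -f also accepts JSON, so this text could be saved and used instead of YAML
manifest_json = json.dumps(pod, indent=2)
print(manifest_json)
```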

Replication Controllers and ReplicaSets

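- Runs multiple instances of a single POD so the application stays available if one fails; even with a single POD, it brings up a new one when the existing POD fails

- Deploys additional PODs when demand (number of users) increases, balancing the load across them

- A replication controller can span multiple nodes in a cluster

- It is being replaced by the ReplicaSet, the recommended newer technology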

- How to create a replication controller

- rc-definition.yml

- kind: ReplicationController

- metadata -> labels -> type: front-end

- spec -> template: the POD definition (its metadata and spec; everything except apiVersion and kind)

- spec -> replicas: 3

- kubectl create -f rc-definition.yml

- kubectl get replicationcontroller

- How to create a ReplicaSet (differences from a replication controller)

- apiVersion: apps/v1

- kind: ReplicaSet

- additionally, spec -> selector -> matchLabels -> type: front-end (tells the ReplicaSet which PODs to manage)

- kubectl create -f replicaset-definition.yml

- Labels and Selectors

- A ReplicaSet uses the labels in its selector as a filter to find the PODs it should monitor

- e.g. it ensures that 3 PODs with matching labels are running at all times

- How to scale

- Update the replicas to 6:

- edit replicas in the file, then kubectl replace -f replicaset-definition.yml, or

- kubectl scale --replicas=6 -f replicaset-definition.yml (this does not update the number in the file), or

- kubectl scale --replicas=6 replicaset myapp-replicaset

- Scaling automatically based on load is also possible
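The label filter and the "keep N running" behavior can be sketched as a reconciliation step in Python. The POD names are hypothetical and the function only computes the difference between desired and actual counts; a real ReplicaSet controller also creates and deletes the PODs and reacts to cluster events.

```python
def matches(selector, labels):
    """matchLabels semantics: every selector key/value must be present on the POD."""
    return all(labels.get(key) == value for key, value in selector.items())


def reconcile(desired, selector, pods):
    """Return how many PODs to create (positive) or delete (negative)."""
    owned = [p for p in pods if matches(selector, p["labels"])]
    return desired - len(owned)


pods = [
    {"name": "myapp-pod-1", "labels": {"type": "front-end"}},
    {"name": "myapp-pod-2", "labels": {"type": "front-end"}},
    {"name": "db-pod", "labels": {"type": "back-end"}},  # ignored by the selector
]
selector = {"type": "front-end"}

print(reconcile(3, selector, pods))  # 1 -> one more front-end POD is needed
print(reconcile(6, selector, pods))  # 4 -> after scaling replicas to 6
```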

Sample of a replicaset-definition.yml:

```yaml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: frontend
  labels:
    app: mywebsite
    tier: frontend
spec:
  replicas: 4
  template:
    metadata:
      name: myapp-pod
      labels:
        app: myapp
    spec:
      containers:
        - name: nginx
          image: nginx
  selector:
    matchLabels:
      app: myapp
```
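In this sample the selector (app: myapp) must match the labels in the POD template, not the ReplicaSet's own labels (app: mywebsite, tier: frontend); for apps/v1 the API server rejects a ReplicaSet whose selector does not match its template labels. A minimal Python sketch of that check, with the manifest written as a dict:

```python
import copy

replicaset = {
    "apiVersion": "apps/v1",
    "kind": "ReplicaSet",
    "metadata": {"name": "frontend", "labels": {"app": "mywebsite", "tier": "frontend"}},
    "spec": {
        "replicas": 4,
        "template": {
            "metadata": {"name": "myapp-pod", "labels": {"app": "myapp"}},
            "spec": {"containers": [{"name": "nginx", "image": "nginx"}]},
        },
        "selector": {"matchLabels": {"app": "myapp"}},
    },
}


def selector_matches_template(rs):
    """Check that every matchLabels pair appears in the POD template's labels."""
    wanted = rs["spec"]["selector"]["matchLabels"]
    template_labels = rs["spec"]["template"]["metadata"]["labels"]
    return all(template_labels.get(key) == value for key, value in wanted.items())


print(selector_matches_template(replicaset))  # True

# Selecting on the ReplicaSet's own label would not match the PODs it creates
broken = copy.deepcopy(replicaset)
broken["spec"]["selector"]["matchLabels"] = {"app": "mywebsite"}
print(selector_matches_template(broken))  # False
```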

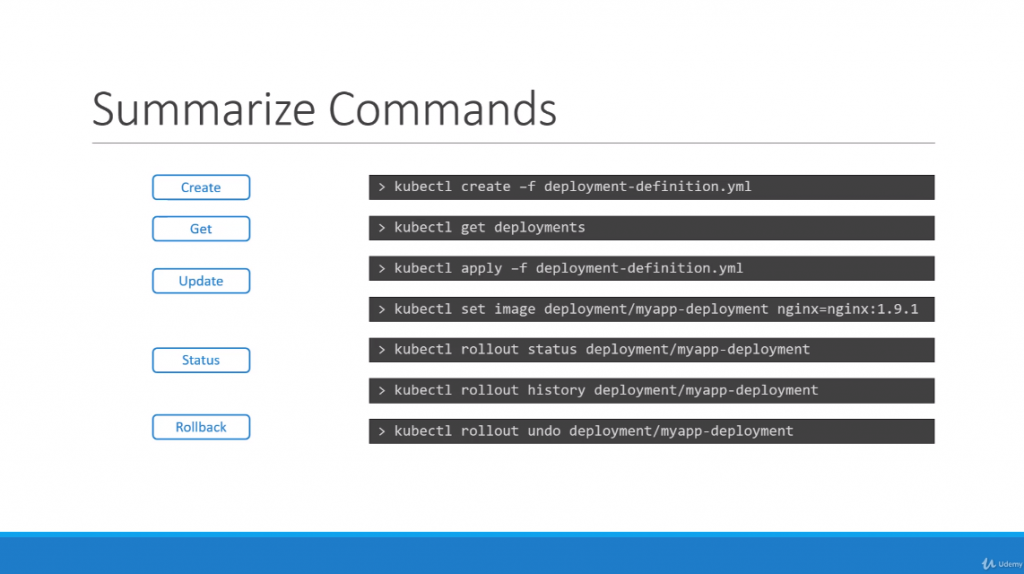

Kubernetes Deployment commands

Sample of a deployment-definition.yml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend
  labels:
    app: mywebsite
    tier: frontend
spec:
  replicas: 4
  template:
    metadata:
      name: myapp-pod
      labels:
        app: myapp
    spec:
      containers:
        - name: nginx
          image: nginx
  selector:
    matchLabels:
      app: myapp
```

Networking in Kubernetes

- Kubernetes does not set up the underlying network itself. Operators must deploy a networking solution that meets these basic requirements:

- Cluster networking requirements

- All containers/PODs can communicate with one another without NAT

- All nodes can communicate with all containers and vice versa without NAT

- Tools that help meet these requirements include Cisco, Cilium, Flannel and VMware NSX

- Each POD is then assigned a unique IP address, so that communication within the cluster works
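One common way to give every POD a unique, routable IP is to split a cluster-wide POD network into one subnet per node and hand out addresses from each node's subnet. The sketch below assumes a 10.244.0.0/16 network split into /24s (the range Flannel's default manifest uses, but treat the numbers as illustrative); it only allocates addresses, ignoring reserved addresses and the routing a real plugin sets up.

```python
import ipaddress
from itertools import islice

# Assumed cluster-wide POD network
CLUSTER_CIDR = ipaddress.ip_network("10.244.0.0/16")


def node_subnets(node_names, prefix=24):
    """Give each node its own non-overlapping slice of the cluster network."""
    subnets = CLUSTER_CIDR.subnets(new_prefix=prefix)
    return {node: next(subnets) for node in node_names}


def allocate_pod_ips(subnet, count):
    """Hand out the first `count` host addresses of a node's subnet to its PODs."""
    return list(islice(subnet.hosts(), count))


subnets = node_subnets(["node01", "node02"])
pod_ips = {node: allocate_pod_ips(net, 2) for node, net in subnets.items()}
for node, ips in pod_ips.items():
    print(node, subnets[node], [str(ip) for ip in ips])
# node01 10.244.0.0/24 ['10.244.0.1', '10.244.0.2']
# node02 10.244.1.0/24 ['10.244.1.1', '10.244.1.2']

# Every POD in the cluster ends up with a unique IP address
all_ips = [ip for ips in pod_ips.values() for ip in ips]
assert len(all_ips) == len(set(all_ips))
```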

Services

- A NodePort Service listens on a port on the node and forwards requests to the POD. This way the POD can be reached from outside the node, e.g. from a laptop. Port mapping specifics:

- the port on the POD is called the targetPort (80)

- the port on the Service itself is called the port (80)

- the port on the node is called the nodePort

- its valid range is 30000 to 32767 (by default)

- Definition file in YAML, e.g. service-definition.yml:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: myapp-service
spec:
  type: NodePort
  ports:
    - targetPort: 80
      port: 80
      nodePort: 30008
  selector:
    app: myapp
    type: frontend
```

- Notice how the selector reuses the labels applied to the PODs when they were created; a POD must carry all of the selector's labels to match. If multiple PODs match (e.g. app: myapp), the NodePort Service adds all of them as endpoints and forwards external requests from users to them.

- commands:

- kubectl create -f service-definition.yml

- kubectl get services

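- ClusterIP Service: gives a group of PODs a single virtual IP inside the cluster, so other services and PODs can reach them

- type: ClusterIP (the default when no type is specified)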
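How a NodePort Service turns its selector into endpoints can be sketched in Python. The POD IPs are hypothetical; the sketch checks the nodePort against the default range and lists the matching PODs as ip:targetPort, which is where a request to any node's IP on port 30008 would end up. Real clusters compute endpoints inside the control plane and forward traffic via kube-proxy.

```python
NODE_PORT_RANGE = range(30000, 32768)  # default range, 32767 inclusive

service = {
    "name": "myapp-service",
    "type": "NodePort",
    "ports": {"targetPort": 80, "port": 80, "nodePort": 30008},
    "selector": {"app": "myapp", "type": "frontend"},
}

# Hypothetical PODs and their IPs
pods = [
    {"ip": "10.244.0.2", "labels": {"app": "myapp", "type": "frontend"}},
    {"ip": "10.244.1.2", "labels": {"app": "myapp", "type": "frontend"}},
    {"ip": "10.244.1.3", "labels": {"app": "myapp"}},  # lacks type: frontend
]


def endpoints(svc, pod_list):
    """PODs carrying every selector label become endpoints, addressed as ip:targetPort."""
    target = svc["ports"]["targetPort"]
    return [
        f'{p["ip"]}:{target}'
        for p in pod_list
        if all(p["labels"].get(k) == v for k, v in svc["selector"].items())
    ]


assert service["ports"]["nodePort"] in NODE_PORT_RANGE
print(endpoints(service, pods))  # ['10.244.0.2:80', '10.244.1.2:80']
```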

Sample of a service-definition.yml:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: frontend
  labels:
    app: myapp
spec:
  type: NodePort
  ports:
    - port: 80
      targetPort: 80
  selector:
    app: myapp
```

Microservices Architecture

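A sample end-to-end voting application made of:

- voting-app, where users cast votes

- redis, an in-memory DB storing the votes

- worker in .NET, processing the votes into the postgres db

- result-app, displaying the results

- built with Python, Node.js, Docker and other technologies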

with commands:

- docker run -d --name=redis redis

- docker run -d --name=db postgres:9.4

- docker run -d --name=vote -p 5000:80 --link redis:redis voting-app

- docker run -d --name=result -p 5001:80 --link db:db result-app

- docker run -d --name=worker --link db:db --link redis:redis worker

Note: --link is a deprecated legacy feature; Docker recommends user-defined networks instead, on which containers can reach each other by name.
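The data flow between these containers can be sketched as a toy in-process Python pipeline: a deque stands in for redis, a Counter stands in for the postgres db, and each function plays one component. The vote values are hypothetical and nothing here talks to real services; it only shows who writes and who reads what.

```python
from collections import Counter, deque

redis_queue = deque()        # stands in for redis: in-memory store of incoming votes
postgres_votes = Counter()   # stands in for the postgres db holding tallied results


def voting_app(vote):
    """Front end: record a vote in the in-memory store."""
    redis_queue.append(vote)


def worker():
    """Background job: move every pending vote from redis into the database."""
    while redis_queue:
        postgres_votes[redis_queue.popleft()] += 1


def result_app():
    """Front end: read the current totals from the database."""
    return dict(postgres_votes)


for v in ["cats", "dogs", "cats"]:
    voting_app(v)
worker()
print(result_app())  # {'cats': 2, 'dogs': 1}
```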

Services in Voting Application Explained

Below is some additional clarification on services in the Example Voting Application:

Think of a service as a load balancer: it receives requests on the front end and forwards them to the backend PODs. In this case the redis service receives requests on port 6379 and forwards them to the actual redis systems running in the backend as PODs. That is what the redis service definition describes.

The service is exposed within the cluster under the name of the service, “redis” in this case. The Python code running in the voting-app connects to the redis service using this hostname, as can be seen in the voting-app source code. All services within a Kubernetes cluster can be reached by any POD or other service using the service name. The kube-dns component of the Kubernetes architecture makes this possible.

In the voting-app source code, port 6379 is not specified because the Python redis module/library uses 6379 by default; as you may already know, 6379 is the default port for redis. If you wish, you could change the redis service to listen on another port, as in the following example.

The service is now named “some-redis-service” and listens on port 7000, so we change the source code in the voting-app POD accordingly. But remember that the actual redis application still runs on port 6379 in the redis POD. So we leave the service's targetPort at 6379, because that is where requests should ultimately be forwarded.
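The port remapping in this example can be modeled with a small Python sketch: a client connects to the service name on the service port (7000), and the connection ends up at a redis POD on the targetPort (6379). The cluster state and POD IP are hypothetical, and real clusters do this through DNS and kube-proxy rather than a dictionary lookup.

```python
# Hypothetical cluster state: service name -> service port, target port, backing POD IPs
services = {
    "some-redis-service": {"port": 7000, "targetPort": 6379, "pods": ["10.244.1.5"]},
}


def resolve(host, port):
    """Mimic where a connection to host:port ends up when host is a service name."""
    svc = services[host]  # in a real cluster, DNS resolves the service name
    if port != svc["port"]:
        raise ConnectionRefusedError(f"{host} does not listen on {port}")
    pod_ip = svc["pods"][0]  # a real service may pick any matching POD
    return f"{pod_ip}:{svc['targetPort']}"


print(resolve("some-redis-service", 7000))  # 10.244.1.5:6379
```

Connecting to some-redis-service on 6379 would fail in this model, which is why the voting-app code has to be updated to use port 7000.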